Does Australia’s AI Future Lie Beyond the LLM Hype?

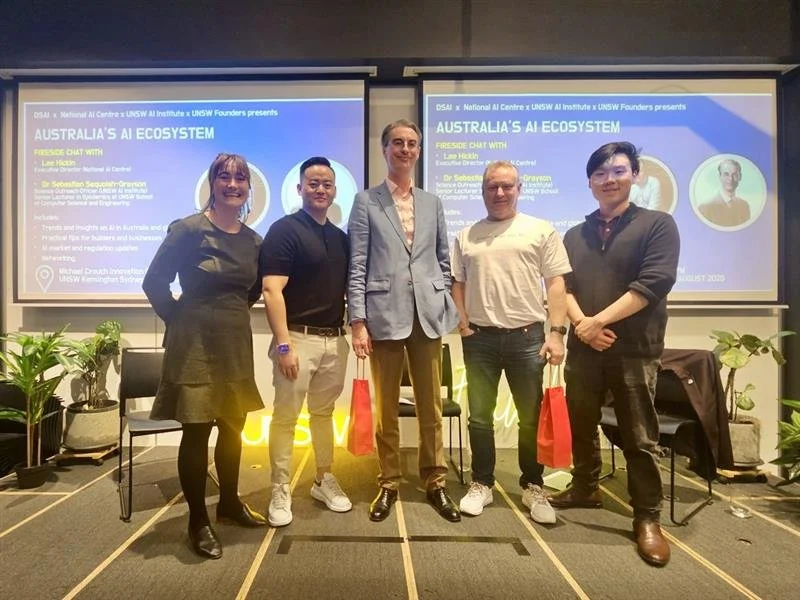

From left to right: Nina Juhl, Senior Manager (Curiosity), UNSW Founders; Michael Wang, CEO, DSAi; Dr Sebastien Sequoiah-Grayson, Science Outreach Officer at UNSW AI Institute and Senior Lecturer in Epistemics at UNSW School of Computer Science and Engineering; Lee Hickin, Executive Director of the National AI Centre; and Raymond Sun, Director, DSAi.

8 September 2025

Dr Kate Gwynne is Practical AI Program Manager at UNSW Founders.

Over the last few weeks, it’s been hard to miss the heated debate around sovereign Large Language Models (LLMs).

LLMs, such as the models behind ChatGPT and Meta’s Llama, are AI models trained on vast amounts of data to understand and generate language. Seeing countries like Sweden launch their own open-source models, we seem to be experiencing a rising sense of FOMO, like a group of toddlers waiting for a go on the swing, anxious about whether we’ll ever actually get a turn. As AI search replaces traditional search engines, it’s hard not to feel frustrated when results fail to recognise interests specific to my geography, or worse, to wonder whether they are being deliberately overlooked.

Given my work advising startups on how to leverage AI, I’ve followed this discussion closely, and the recent “Australia’s AI Ecosystem” event offered a chance to dig deeper into the issues surrounding it and related developments.

In a fireside chat hosted by the Data Science and AI Association of Australia (DSAi) in collaboration with UNSW Founders, we heard from Lee Hickin, Executive Director of the National AI Centre, and Dr Sebastien Sequoiah-Grayson, Science Outreach Officer at UNSW AI Institute and Senior Lecturer in Epistemics at UNSW School of Computer Science and Engineering.

The room was jam-packed with both people and ideas, and the conversation spanned many topics.

Here’s my wrap-up of the highlights from the conversation.

Sovereignty and AI:

Lee challenged the idea that AI sovereignty should centre on locally built LLMs. Instead, he urged us to ask: what real value would a sovereign LLM give us?

Sovereignty, Lee argued, is less about Australia owning a single LLM and more about building the muscle of capability: people, infrastructure and resources. Think of it like filmmaking. Owning a single camera doesn’t mean a country has a film industry. You need directors, actors, set designers, editors, distribution networks and audiences. His suggestion: we should be investing in ecosystems and relationships so Australia can support itself if the need arises.

An AI researcher in the audience echoed this sentiment, explaining how most of their current work is focused not on LLMs but on other branches of machine learning. LLMs are only one piece of the larger AI puzzle, and if our focus rests solely there, we risk missing other equally valid opportunities for innovation.

Australia’s Unique Advantages:

Sebastien reminded us not to forget our geographic position: we are a Western nation in Asia, which gives us a particular strategic role in the ‘Asian century’.

Since most of us rely on American AI infrastructure, the opportunities this position offers seem underexplored. Our other strengths are undeniable: vast land and cheap renewable energy for data centres, world-leading research, a strong problem-solving culture, and unique datasets in areas such as autonomous vehicles and healthcare. Sebastien also emphasised that the best way to advance innovation is through a culture of collaboration: someone always knows someone, and it’s remarkable what can be achieved when everyone is in the room together.

Jobs – the Future Winners and Losers:

The conversation then moved to economic and social impact, with one attendee raising concerns about jobs. On this point, Lee was clear: we might lose five jobs, but create ten.

Many tasks AI replaces fall under the “3 Ds”: dirty, dull, and dangerous. Sebastien agreed, adding that fear-driven hype cycles are often wrong: radiographers, once predicted to become obsolete, are now in short supply. Still, he cautioned that the pace of change may outstrip our ability to adapt.

Having worked through three digital transformations and found opportunity in each, I have come to see possibility in change. But we must ensure that support exists to help people pivot and upskill. Again, this comes back to the ecosystem we need to nurture. Both Lee and Sebastien stressed the importance of AI literacy, not only to overcome fear and uncertainty but also to help all businesses adopt AI practices that deliver the greatest value.

Most importantly, we must focus on applying AI to solve real problems rather than building tools in search of problems. This is easy to forget amid all the hype, but even as we see productivity benefits in areas like generative and agentic AI, it is important to frame these technologies within human-centric methodologies. One common pitfall to avoid is a solution that addresses a problem for one stakeholder while creating a new one for someone else.

On unintended consequences, Sebastien underlined the importance of developing the skill to check AI outputs, sometimes even speaking them out loud as a form of double validation. The larger point, of course, is the risk of AI becoming a crutch. We need to ensure that AI literacy does not come at the expense of critical thinking; ideally, we should use AI to enhance it.

Regulation & Governance:

On regulation, Lee made a strong case for a lighter touch. Poorly designed or premature laws risk stifling innovation. The EU, which has taken a risk-based approach to AI regulation through its AI Act, was cited as a cautionary example, with heavier compliance costs and slower time-to-market for companies such as startups. Regulation, Lee argued, should focus on use and interaction, not on the technology itself. He supported Australia’s approach, which has focused more on voluntary frameworks and guidelines that have been adopted willingly by a range of local institutions. For all our FOMO, Australia’s more moderate stance on regulation has yielded advantages, allowing faster experimentation and adoption.

Market Dynamics and Open Source:

As we look to the future, will big companies consolidate and dominate the market? Maybe not, argued Lee, noting that open-source AI is increasingly powerful and collaborative and may serve as a useful counterbalance. Earlier this year, the Chinese AI company DeepSeek made headlines by launching a model that rivalled ChatGPT with a fraction of the team and budget. If open source and SMEs flourish, there is hope for more distributed markets. Another point raised was that privacy is a shifting, not static, phenomenon: what future generations are comfortable with may be very different from our own expectations.

We are at an important inflection point that will determine Australia’s innovation trajectory and the social and industrial impact of AI over the next five to ten years. There are many reasons to support the development of sovereign LLM infrastructure; however, history shows that narrow approaches to innovation rarely yield the results we need. We must remain open-minded and optimistic. Solutions often emerge from niche or overlooked spaces, from those tackling problems most people ignore. In the meantime, bringing diverse viewpoints together to challenge one another has never been more important. After all, what is technological innovation good for, if not questioning the status quo?

Dr Kate Gwynne is Practical AI Program Manager at UNSW Founders.

She leads upskilling initiatives that equip startups and the broader UNSW community with the tools and knowledge they need to embrace the possibilities of AI.

You can book a 1:1 with her directly via the UNSW Founders Community Coach and Connect page.